Mastering Reinforcement Learning for Robotics with Cubix

RL for robotics, covering core concepts, Q-Learning and Deep Q-Learning

Overview

Mastering Reinforcement Learning for Robotics is a course I developed during my time working with The Construct Robotics Institute. This course is designed to provide a comprehensive understanding of Reinforcement Learning (RL) concepts and their application to robotics. Through a highly practical approach, participants gain the skills to design, implement, and evaluate RL algorithms for robotic systems.

Goal

This course is designed to provide you with a comprehensive understanding of Reinforcement Learning (RL) concepts and how to apply them to robotics. By the end of this journey, you’ll have the skills to:

- Design RL algorithms.

- Implement and test RL methods in simulated robotic environments.

- Evaluate RL systems for complex robotic tasks.

Key Features

- Minimal theory with a focus on hands-on learning.

- Structured units that progress from fundamental to advanced RL techniques.

- Step-by-step guidance for building RL pipelines.

Technical Approach

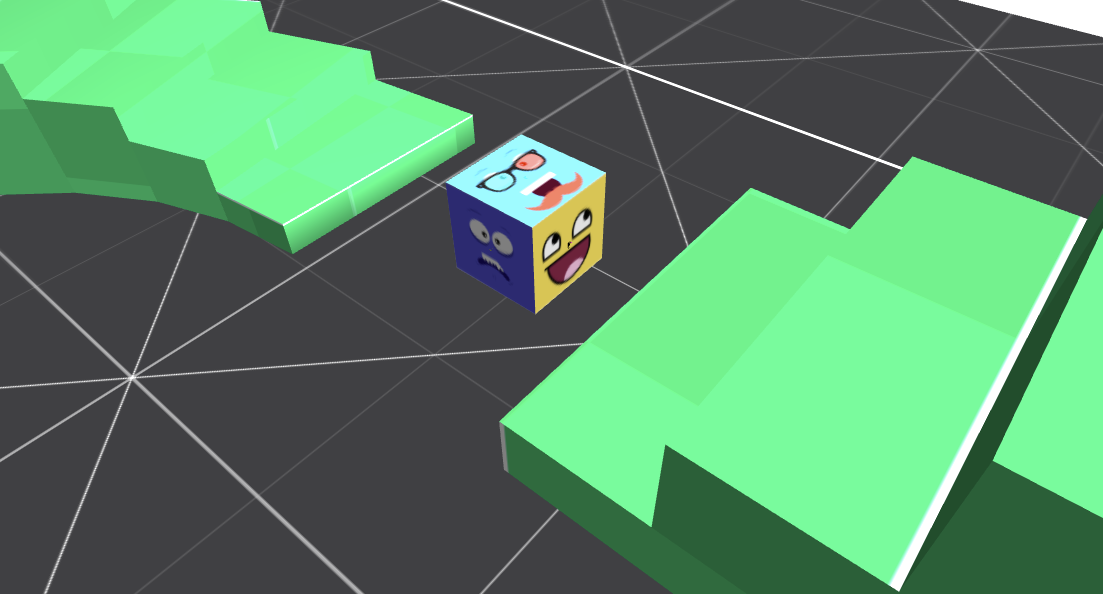

Robot: Cubix

The robot used in this project is Cubix, a versatile, mobile robotic platform designed for experimentation in navigation and control. Cubix operates within a simulated environment and relies on a Force-Torque Controller to move and rotate. This controller sends commands using the geometry_msgs/Wrench topic in ROS 2, enabling precise control over the forces and torques applied to the robot. These inputs allow Cubix to navigate its environment, responding dynamically to its orientation and the goals set within the simulation.

Software

- Framework: ROS 2 Humble.

- Simulation Tools: Gazebo, PyGame.

- Libraries: PyTorch.

What You Will Learn

- Understand the fundamental principles of reinforcement learning, including agents, environments, actions, and rewards.

- Master Q-Learning, a foundational RL algorithm, and implement it for a robotic use case.

- Transition to Deep Q-Learning (DQL) to handle continuous state spaces and more complex robotic tasks.

- Gain hands-on experience implementing RL algorithms for a robotic platform (Cubix) and test them in simulated environments.

Why Reinforcement Learning?

Understanding the Action Space with Cubix

Though at first glance, applying force and torque to Cubix seems straightforward, it becomes quite challenging due to how Cubix responds to these inputs based on its orientation. The same force-torque combination will result in entirely different movements when Cubix is oriented differently. This is because the direction Cubix is facing directly impacts how it interprets and executes the applied forces. In other words, the same action can produce different results depending on how the robot is oriented in space.

Why is it so difficult?

Imagine trying to move Cubix to a specific goal pose. While there are theoretically infinite ways to reach the pose, determining the most efficient and accurate way requires precise control of the Force-Torque Controller. Adding to the complexity, the force and torque must be applied with the correct intensity, timing, and duration to avoid overshooting or unwanted behaviors. Solving this problem manually is not only time-consuming but also impractical for complex tasks.

The RL Solution

Reinforcement Learning offers a robust solution to these challenges. Instead of manually tuning the force-torque controller, RL enables Cubix to learn optimal strategies through trial and error. By receiving feedback from its environment, Cubix discovers which combinations of force and torque are most effective for reaching the goal pose. This approach allows the robot to adapt and improve its performance over time, achieving efficient and reliable navigation without direct manual intervention.

Course Summary

1. Course Intro

- Overview and course objectives.

- Demo: Watch a robot learn to navigate to its goal using RL techniques.

2. Fundamentals of Reinforcement Learning

- Core RL concepts: Agents, environments, states, actions, rewards.

- Understanding policies and the RL loop.

- Exploration vs. exploitation: Finding the balance.

- RL hyperparameters.

This GIF demonstrates Cubix executing its 12 discrete actions:

- Translational: Forward, Backward, Left, Right, Up and Down

- Rotational: Roll Left, Roll Right, Pitch Foward, Pitch Backward, Yaw Left, Yaw Right

3. Q-Learning

- Introduction to Q-Learning and the Bellman Equation.

- Implementing Q-Learning: From environment setup to policy extraction.

- Testing Q-Learning with a simple robot in a simulated grid.

- Hands-on: Applying Q-Learning to Cubix.

Cubix successfully achieves its mission by reaching a target destination using Q-Learning. This video demonstrates the results after training, showcasing Cubix testing the optimal policy it learned over the course of several thousand episodes.

4. Deep Q-Learning (DQL)

- Why transition to DQL? Overcoming the limitations of discrete state spaces.

- DQL Core Concepts.

- Implementing DQL in PyGame: Step-by-step walkthrough.

- Hands-on: Applying Deep Q-Learning to Cubix.

Here, we test a more challenging target destination, and Cubix successfully navigates to its goal by utilizing Deep Q-Learning.

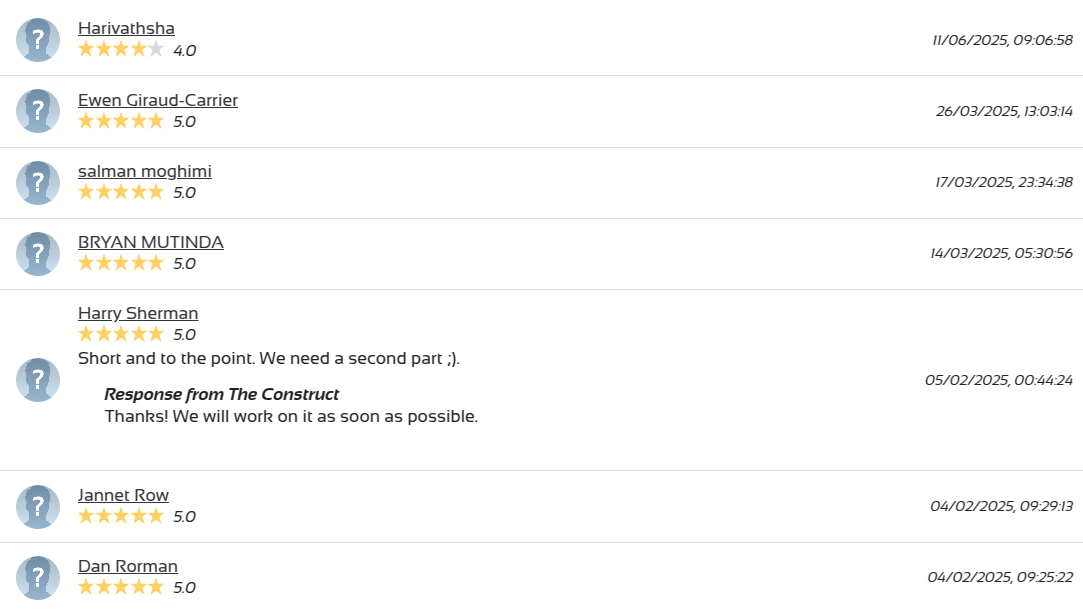

Rating & Testimonials

Access Course

Feel free to access the course here.